<PyTorch: Simple Linear Regression Model>

Date: 2023.02.12

* The PyTorch series will mainly touch on the problems I faced. For the actual code, check out my GitHub repository.

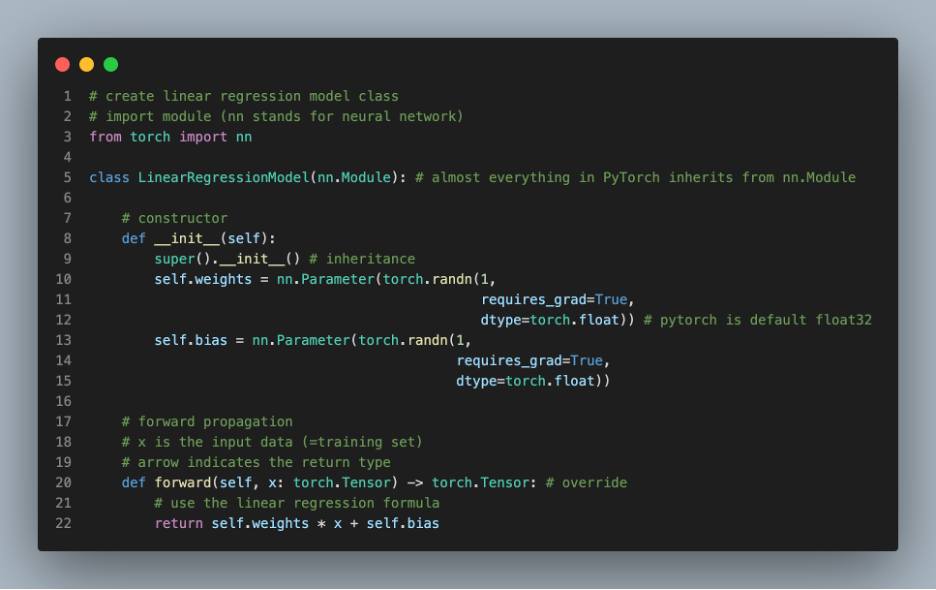

[PyTorch Implementation of Simple Forward Propagation]

* You should know object-oriented programming (OOP) to understand most of the design.

* Quick Code Explanation

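Line 5: nn.Module is the parent class of LinearRegressionModel, so LinearRegressionModel inherits from nn.Module, the base class that provides the building blocks every neural network module needs. For example, when an nn.Parameter (a special kind of tensor defined in torch.nn) is assigned as an attribute of the model, nn.Module automatically registers it, so it shows up in model.parameters() and can be handed to an optimizer.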
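A minimal sketch of what that registration looks like, assuming a model with a `weights` and a `bias` parameter. These names, and the extra `scale` tensor, are my own illustration; the original code is only shown in the image.

```python
import torch
from torch import nn


class LinearRegressionModel(nn.Module):
    """y = weights * x + bias, with both values learned."""

    def __init__(self):
        super().__init__()
        # nn.Parameter attributes are registered by nn.Module automatically
        self.weights = nn.Parameter(torch.randn(1))
        self.bias = nn.Parameter(torch.randn(1))
        # Hypothetical plain tensor, only for comparison: NOT registered
        self.scale = torch.ones(1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.weights * x + self.bias


model = LinearRegressionModel()
print([name for name, _ in model.named_parameters()])  # ['weights', 'bias']
```

Only the two nn.Parameter attributes appear, which is exactly what an optimizer receives when we pass it model.parameters().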

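Line 10~15: __init__ is the constructor (initialization method), where the instance attributes (fields) of the model are declared and initialized. These are attributes stored on self, not local variables. In a subclass of nn.Module, super().__init__() has to be called before any parameters are assigned.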
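To illustrate why the order matters, here is a small sketch with two hypothetical classes, `ForgotSuperInit` and `WithSuperInit`, and a hypothetical helper `builds()`. Assigning an nn.Parameter before nn.Module has been initialized raises an AttributeError:

```python
import torch
from torch import nn


class ForgotSuperInit(nn.Module):
    def __init__(self):
        # Missing super().__init__(): nn.Module's internal bookkeeping
        # (e.g. its parameter registry) has not been set up yet
        self.weights = nn.Parameter(torch.randn(1))


class WithSuperInit(nn.Module):
    def __init__(self):
        super().__init__()                            # set up nn.Module first
        self.weights = nn.Parameter(torch.randn(1))   # instance attribute


def builds(cls) -> bool:
    """Return True if cls() can be constructed without an AttributeError."""
    try:
        cls()
        return True
    except AttributeError as err:
        print(f"{cls.__name__}: AttributeError: {err}")
        return False


print(builds(ForgotSuperInit))  # False
print(builds(WithSuperInit))    # True
```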

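Line 11: “requires_grad=True” is set so that PyTorch's autograd tracks the operations on this parameter and computes its gradient during backpropagation; an optimizer from torch.optim then uses that gradient to update the parameter. (nn.Parameter already defaults to requires_grad=True, so passing it explicitly mainly documents the intent.) Previous posts on building a CNN cover gradient descent, but for a short summary, watch 3Blue1Brown's video series on neural networks. (This video series is GOATED!)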
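A small sketch of what requires_grad does in practice, using made-up values (w = 2, x = 3). The manual update at the end only imitates what an optimizer would do; it is not how the original model is trained.

```python
import torch
from torch import nn

# nn.Parameter already defaults to requires_grad=True;
# passing it explicitly only makes the intent visible
w = nn.Parameter(torch.tensor([2.0]), requires_grad=True)
x = torch.tensor([3.0])  # plain input data: not tracked by autograd
print(w.requires_grad, x.requires_grad)  # True False

y = w * x            # autograd records this multiplication
y.sum().backward()   # dy/dw = x = 3
print(w.grad)        # tensor([3.])
print(x.grad)        # None, because x was never tracked

# One hand-written gradient-descent step (learning rate 0.1 chosen arbitrarily);
# torch.optim optimizers perform this kind of update for us
with torch.no_grad():
    w -= 0.1 * w.grad
print(w.item())      # about 1.7
```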

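Line 20: If you explore the nn.Module class, you will find that forward() is declared there, but the base version does not implement any computation (calling it raises NotImplementedError). So every subclass must override forward() with its own computation, here the output of the linear regression. Calling model(x) then runs forward(x) through nn.Module's __call__.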
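The sketch below overrides forward() in a hypothetical LinearRegressionModel with fixed starting values (0.7 and 0.3, chosen only so the output is easy to check), and shows that the plain base class cannot be called:

```python
import torch
from torch import nn


class LinearRegressionModel(nn.Module):
    def __init__(self):
        super().__init__()
        # Hypothetical fixed start values, for an easy-to-check output
        self.weights = nn.Parameter(torch.tensor([0.7]))
        self.bias = nn.Parameter(torch.tensor([0.3]))

    # Override nn.Module.forward with our own computation
    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.weights * x + self.bias


model = LinearRegressionModel()
x = torch.tensor([0.0, 1.0, 2.0])
# Call the module itself: nn.Module.__call__ runs any hooks, then forward()
print(model(x))  # values approximately 0.3, 1.0, 1.7

# The base class does not implement forward, so calling it fails
try:
    nn.Module()(x)
except NotImplementedError:
    print("plain nn.Module has no usable forward()")
```

Calling model(x) rather than model.forward(x) is the usual convention, since __call__ also runs any registered hooks.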

[PyTorch Model Building Essentials]

- torch.nn – contains all of the building blocks for computational graphs (a neural network can be considered a computational graph)

- torch.nn.Parameter – the values our model should try to learn; a PyTorch layer from torch.nn (such as nn.Linear) often creates these for us

- torch.nn.Module – the base class for all neural network modules; if you subclass it, you must override forward()

- torch.optim – contains optimization algorithms that update the parameters (e.g. stochastic gradient descent)
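Putting the four pieces together, a minimal training loop might look like the following. This is a sketch rather than the code in my repository: the synthetic data (a line with weight 0.7 and bias 0.3), nn.MSELoss, SGD, the learning rate 0.1, and 1,000 epochs are all assumptions picked for illustration.

```python
import torch
from torch import nn

torch.manual_seed(42)

# Synthetic data from a known line (values chosen for illustration)
true_weight, true_bias = 0.7, 0.3
X = torch.arange(0, 1, 0.02).unsqueeze(dim=1)  # 50 inputs, shape (50, 1)
y = true_weight * X + true_bias


class LinearRegressionModel(nn.Module):              # torch.nn.Module
    def __init__(self):
        super().__init__()
        self.weights = nn.Parameter(torch.randn(1))  # torch.nn.Parameter
        self.bias = nn.Parameter(torch.randn(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.weights * x + self.bias


model = LinearRegressionModel()
loss_fn = nn.MSELoss()                                   # from torch.nn
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # from torch.optim

for epoch in range(1000):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(X), y)  # forward pass + loss
    loss.backward()              # autograd computes d(loss)/d(parameter)
    optimizer.step()             # gradient-descent update

print(model.weights.item(), model.bias.item())  # close to 0.7 and 0.3
```

The order zero_grad, forward, backward, step is the usual pattern. PyTorch accumulates gradients by default, so skipping zero_grad() would mix gradients from earlier iterations into each update.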

I’m taking linear algebra and program design classes right now, and both are directly related to the fundamentals of neural networks. Linear algebra provides the mathematics of vectors and matrices that neural network computations are built on, and program design (which covers object-oriented programming) is crucial for using modules and designing large-scale applications.
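As one concrete link to linear algebra: a fully connected layer is a matrix multiplication plus a bias vector. A quick check with nn.Linear (the layer sizes and the random input are arbitrary choices for illustration):

```python
import torch
from torch import nn

torch.manual_seed(0)

# nn.Linear creates its own weight and bias nn.Parameters
layer = nn.Linear(in_features=3, out_features=2)
print(layer.weight.shape, layer.bias.shape)  # torch.Size([2, 3]) torch.Size([2])

x = torch.randn(4, 3)  # a batch of 4 input vectors

# The layer is just a matrix product with the transposed weight, plus a bias
manual = x @ layer.weight.T + layer.bias
print(torch.allclose(layer(x), manual))  # True
```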

I will also touch on my experience returning to college and my recent transitions.
