<PyTorch: Avoiding Dimension Problems through Basic Operations>

Date: 2023.01.18

* The PyTorch series will mainly touch on the problems I faced. For the actual code, check out my GitHub repository.

[A Few Techniques to Avoid Errors]

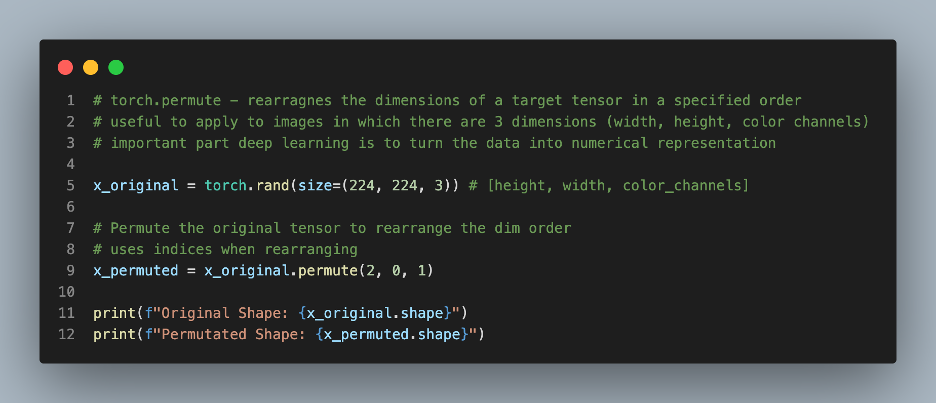

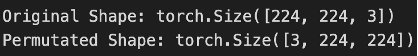

A crucial part of deep learning is turning data into numerical representations. For example, an image can be represented as a 3-dimensional tensor of size [224, 224, 3] (height, width, and color channels). Since tensors can have many dimensions, we use PyTorch's built-in functions to modify a tensor's shape, size, and dimension order. These techniques are critical because the most basic errors usually come from incompatible sizes.
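A minimal sketch of what these shapes look like in code, assuming a random tensor (`torch.rand`) stands in for a real image. It also shows the kind of error that incompatible sizes produce.

```python
import torch

# A random stand-in for a 224 x 224 RGB image, stored as [height, width, channels]
image = torch.rand(224, 224, 3)
print(image.shape)    # torch.Size([224, 224, 3])
print(image.ndim)     # 3
print(image.numel())  # 150528 (224 * 224 * 3)

# Incompatible sizes are the classic source of errors:
# a [2, 3] matrix cannot be multiplied by another [2, 3] matrix.
a = torch.rand(2, 3)
b = torch.rand(2, 3)
try:
    a @ b
except RuntimeError as err:
    print("RuntimeError:", err)

# Matching the inner dimensions fixes it: [2, 3] @ [3, 2] -> [2, 2]
print((a @ b.T).shape)  # torch.Size([2, 2])
```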

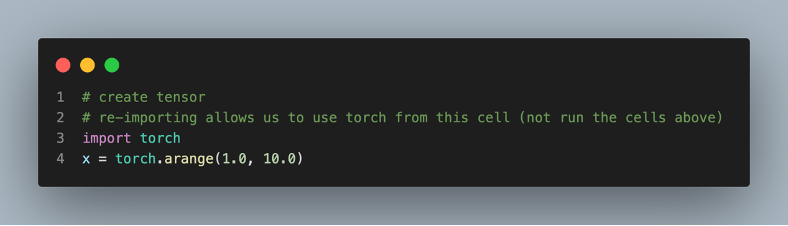

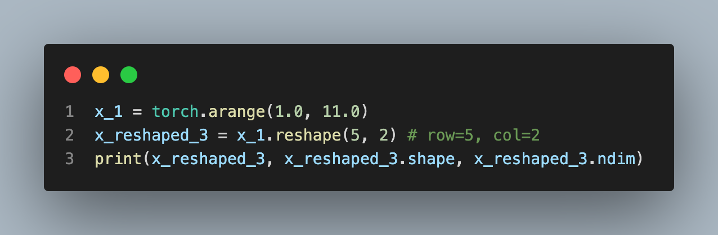

[Reshaping]

Reshaping changes the shape of a tensor. However, it requires shape compatibility: the new shape must be able to hold every value of the original tensor without omitting any, which means the product of the new dimensions must equal the original number of elements. Let's see an example.

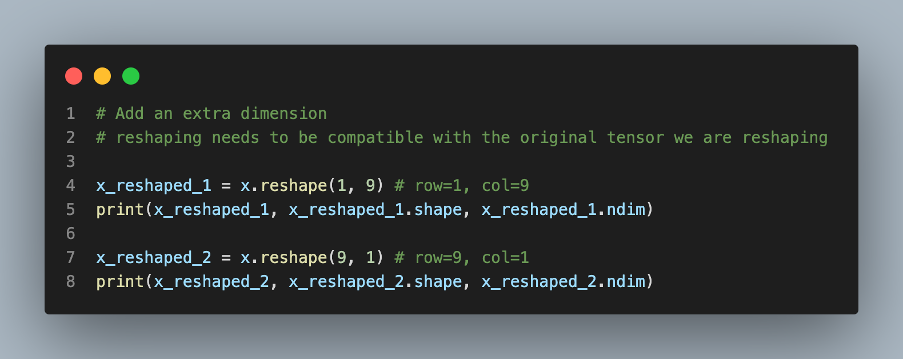

In the figure above, either the row count or the column count equals the original size of the tensor, 9, so the number of elements is preserved and the shapes are compatible. The interesting reshape is on line 7, where each value becomes its own row.
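A sketch of compatible reshapes like the ones described above, assuming the original tensor holds the nine values 1 through 9 (the figure's exact values may differ):

```python
import torch

# Nine elements: 1., 2., ..., 9.
x = torch.arange(1., 10.)
print(x.shape)  # torch.Size([9])

# Any target shape works as long as its dimensions multiply to 9
row = x.reshape(1, 9)     # one row holding all nine values
col = x.reshape(9, 1)     # each value becomes its own row
square = x.reshape(3, 3)  # 3 * 3 = 9 also fits; values fill row by row

print(row.shape, col.shape, square.shape)
print(col)
```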

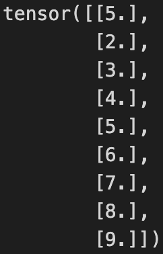

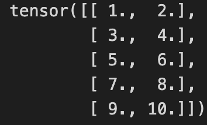

Let's see another example, with 10 elements in the original tensor.

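Reshaping is quite intuitive. However, be careful about size compatibility: PyTorch doesn't delete or pad elements to make a shape fit. It lays the existing elements out, in their original order, into the requested shape, and raises a RuntimeError if the number of elements doesn't match (10 elements cannot become a 3 x 3 tensor, for instance).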
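The same idea with ten elements, as a sketch assuming the values 1 through 10: shapes whose dimensions multiply to 10 work, while a 3 x 3 target (only 9 slots) is rejected with an error instead of silently dropping an element.

```python
import torch

# Ten elements: 1., 2., ..., 10.
y = torch.arange(1., 11.)

# Compatible: 2 * 5 = 10 and 5 * 2 = 10; elements keep their original order
two_by_five = y.reshape(2, 5)
five_by_two = y.reshape(5, 2)
print(two_by_five)
print(five_by_two)

# Incompatible: 3 * 3 = 9 != 10, so PyTorch raises an error
try:
    y.reshape(3, 3)
except RuntimeError as err:
    print("RuntimeError:", err)
```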

[View]

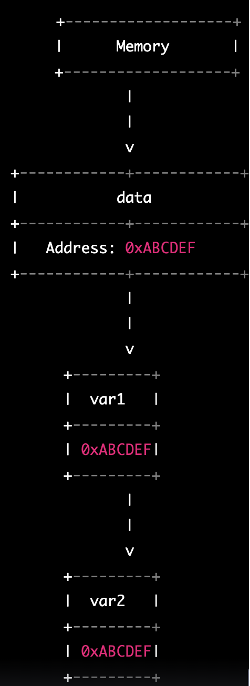

View is confusing at first. A view is a new tensor that points to the same memory as another tensor. Because the two share that memory, they always hold the same values: changing an element through one changes it in the other. Remember that the value is stored in memory, not in the variable; a variable is simply a name that references the memory space allocated for the data.

By applying PyTorch's view() function, we can access the same data with a different shape or size without copying it. As with reshaping, the new shape must be size-compatible with the original. Typically, view is used to look at data from a different shape perspective without creating a copy. Since the memory is shared, however, writing to the view also modifies the original tensor.
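A minimal sketch of view's shared memory, assuming the values 1 through 9. `data_ptr()` returns the memory address of a tensor's first element, which lets us confirm that no copy was made. One caveat worth knowing: view() only works when the new shape is compatible with the tensor's memory layout, so on a non-contiguous tensor (such as the result of `.t()`) it can raise an error, whereas reshape() falls back to copying.

```python
import torch

x = torch.arange(1., 10.)
z = x.view(3, 3)  # same nine values, seen as a 3 x 3 matrix

# Both tensors point at the same memory: no copy was made
print(x.data_ptr() == z.data_ptr())  # True

# Writing through the view changes the original tensor as well
z[0, 0] = 100.
print(x[0])  # tensor(100.)

# A transposed tensor is non-contiguous, so view() cannot flatten it
t = z.t()
try:
    t.view(9)
except RuntimeError as err:
    print("RuntimeError:", err)

# reshape() handles this case by copying the data into a new layout
flat = t.reshape(9)
print(flat)
```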

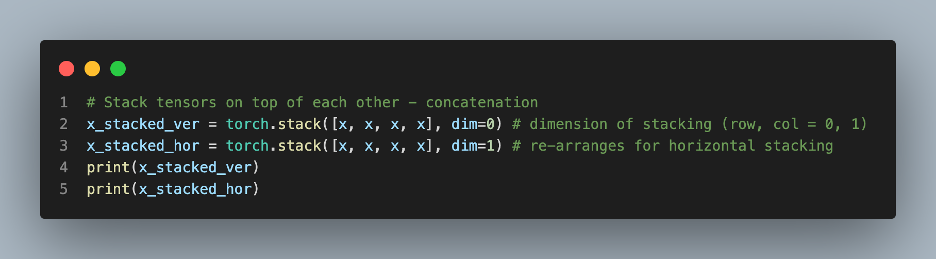

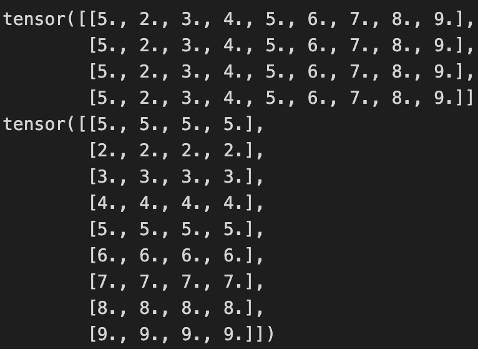

[Stacking (aka Concatenation)]

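Stacking joins tensors together, similar to concatenating tables with pandas or NumPy. Since we are dealing with tensors, we can choose the dimension along which they are joined. Strictly speaking, torch.stack() joins tensors along a new dimension (all inputs must have the same shape), while torch.cat() joins them along an existing dimension.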
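A sketch contrasting the two functions, assuming two small 2 x 3 tensors; the `dim` argument chooses the dimension used for joining.

```python
import torch

a = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])
b = torch.tensor([[7, 8, 9],
                  [10, 11, 12]])

# torch.cat joins along an existing dimension
rows = torch.cat([a, b], dim=0)  # shape [4, 3]: b's rows go below a's rows
cols = torch.cat([a, b], dim=1)  # shape [2, 6]: b's columns go beside a's columns

# torch.stack joins along a new dimension (inputs must have identical shapes)
stacked0 = torch.stack([a, b], dim=0)  # shape [2, 2, 3]: [a, b]
stacked1 = torch.stack([a, b], dim=1)  # shape [2, 2, 3]: pairs rows, [[a[0], b[0]], [a[1], b[1]]]

print(rows.shape, cols.shape, stacked0.shape, stacked1.shape)
```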

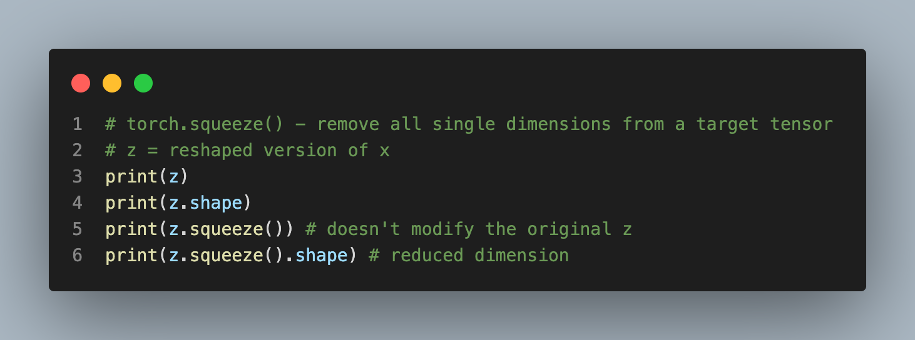

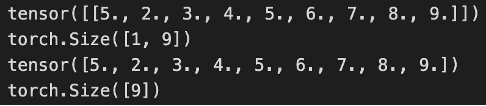

[Squeezing]

Squeeze removes dimensions of size 1 from a tensor.

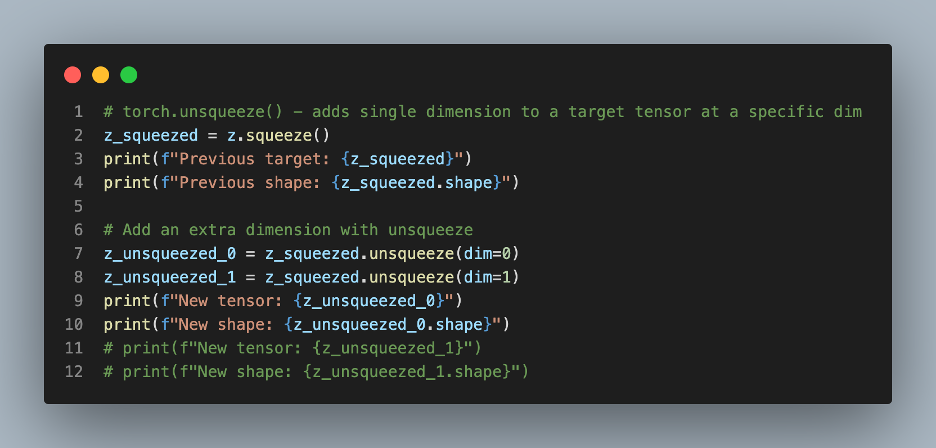

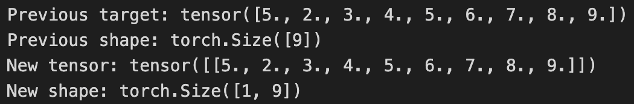
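A minimal sketch of squeeze, assuming a tensor with two size-1 dimensions. Passing a dimension index squeezes only that dimension, and only if its size is 1.

```python
import torch

# Shape [1, 3, 1]: two dimensions have size 1
x = torch.zeros(1, 3, 1)

print(x.squeeze().shape)   # torch.Size([3]): every size-1 dimension removed
print(x.squeeze(0).shape)  # torch.Size([3, 1]): only dimension 0 removed
print(x.squeeze(1).shape)  # torch.Size([1, 3, 1]): dimension 1 has size 3, so nothing changes
```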

Unsqueeze does the opposite: it inserts a new dimension of size 1 at a given position.

Squeeze and unsqueeze operations are straightforward. The best way to see their practical applications is to use them while building a CNN.
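A sketch of unsqueeze and of the CNN use case, assuming a single image in [channels, height, width] order. Models are usually written for batches shaped [batch, channels, height, width], so a single image needs a leading batch dimension of size 1. The `nn.Conv2d` layer sizes below are arbitrary illustration values.

```python
import torch
import torch.nn as nn

# One image in [channels, height, width] order
image = torch.rand(3, 224, 224)

# unsqueeze(0) inserts a size-1 batch dimension at the front
batch = image.unsqueeze(0)
print(batch.shape)  # torch.Size([1, 3, 224, 224])

# Arbitrary layer sizes, for illustration only
conv = nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3)
out = conv(batch)
print(out.shape)  # torch.Size([1, 8, 222, 222]) since 224 - 3 + 1 = 222

# squeeze(0) removes the batch dimension again
print(out.squeeze(0).shape)  # torch.Size([8, 222, 222])

# Inserting at other positions
v = torch.tensor([1, 2, 3])
print(v.unsqueeze(0).shape)  # torch.Size([1, 3])
print(v.unsqueeze(1).shape)  # torch.Size([3, 1])
```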

[Permute]
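permute() reorders a tensor's dimensions. A sketch, assuming an image stored as [height, width, channels] like the [224, 224, 3] example at the top, which needs to become [channels, height, width], the layout PyTorch's convolution layers use. Like view, permute returns a view of the same memory rather than a copy.

```python
import torch

# Image stored as [height, width, channels]
hwc = torch.rand(224, 224, 3)

# New order: old dim 2 (channels) first, then old dims 0 and 1
chw = hwc.permute(2, 0, 1)
print(chw.shape)  # torch.Size([3, 224, 224])

# The same element, addressed with the indices in the new order
print(torch.equal(hwc[10, 20, 1], chw[1, 10, 20]))  # True

# permute returns a view: same memory, no copy
print(hwc.data_ptr() == chw.data_ptr())  # True

# The result is non-contiguous, so chw.view(...) may fail; chw.reshape(...) still works
print(chw.is_contiguous())  # False
```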

These are essential techniques when preprocessing data, since sizes, shapes, and indices must match for a neural network to run successfully.

Side Note: Working with PyTorch and reviewing neural network models from a mathematical perspective is much more intuitive and easier now than when I first started building them, before this spring semester back at school. Taking linear algebra and program design classes does help with the overall programming experience!
