An Intro to Natural Language Processing

Date : 2022.10.28

*The contents of this book are heavily based on Stanford University’s CS224d course.

[Welcome to NLP]

Natural Language Processing (NLP) is the task of making computers understand human language. The idea is quite simple, but the methods may not be.

Language is written with letters, but meaning lies within words. Words are the standard unit for carrying meaning. Thus, we need to make machines understand “words.”

Let’s explore methods to represent words so machines can understand them.

- Thesaurus Method : Use a human-made dictionary.

- Statistical Method : Use statistical information of word distributions.

- Inference Method : Use a neural network to predict words from their context. Known as word2vec.

[Thesaurus Method]

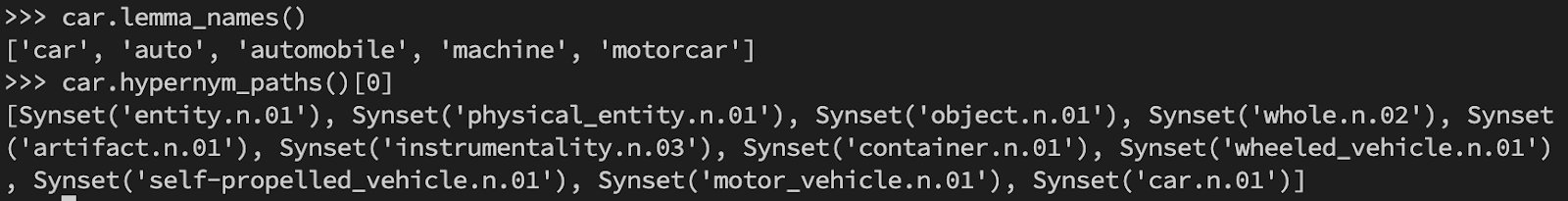

One of the most famous thesauri is WordNet, built by Princeton University. WordNet provides not only “word - definition” links but also a network of relationships between words.

[WordNet & NLTK]

Natural Language ToolKit (NLTK) is a Python library for NLP that includes an interface to WordNet. Let’s have a taste.

In my experience, the instructions at https://www.nltk.org/install.html were misleading. Installing the nltk package alone is not enough: the WordNet data files have to be downloaded separately.

Once all the files are downloaded, the nltk modules work like a charm. Let’s play around with some functions.

Each path through the word graph runs from the most abstract concept to the most specific one. The paths come back as a list of lists because a word may have more than one parent at a given level of abstraction, and therefore more than one path. We can also calculate the similarity, or proximity, between different words.

Although WordNet and other applications of the thesaurus method seem to work, the approach has a major flaw: humans need to label and build the network manually. This is time-consuming, costly in human effort, and slow to keep up as language changes.

[Statistics Based Method]

Another approach is statistical processing and neural networks. The combination of the two allows computers to learn about ‘words’ by reading large amounts of text. From now on, we will use a corpus, which is a large collection of text. A corpus is written by humans, which means it contains how humans write, how humans select words, and what words mean. The goal of the statistical method is to use a program to extract this human knowledge of the language automatically and efficiently.

Let’s use a few Python techniques for preprocessing our data.

The first step to understanding words is representing them in a form a computer can work with. We use a distributional representation, which represents each word as a vector.

Over years of NLP research, the distributional hypothesis has become a foundation for representing words this way. The distributional hypothesis states that ‘a word’s meaning is formed by its context (the surrounding words).’ Thus, we care not only about the word itself, but also about its relationship with the context.
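A minimal sketch of such preprocessing, using only the standard library. The sample sentence and the names `preprocess`, `word_to_id`, and `id_to_word` are assumptions made for illustration. The idea is to lowercase the text, split it into words, and give every distinct word an integer ID.

```python
def preprocess(text):
    """Lowercase the text, split it into words, and map each word to an ID."""
    # Put a space before each period so it becomes its own token.
    words = text.lower().replace(".", " .").split()

    word_to_id = {}
    id_to_word = {}
    for word in words:
        if word not in word_to_id:
            new_id = len(word_to_id)  # IDs are assigned in order of first appearance
            word_to_id[word] = new_id
            id_to_word[new_id] = word

    # The corpus is the original text rewritten as a list of word IDs.
    corpus = [word_to_id[word] for word in words]
    return corpus, word_to_id, id_to_word


# Hypothetical one-sentence corpus, chosen only to keep the output readable.
text = "You say goodbye and I say hello."
corpus, word_to_id, id_to_word = preprocess(text)
print(corpus)      # [0, 1, 2, 3, 4, 1, 5, 6]
print(id_to_word)  # {0: 'you', 1: 'say', 2: 'goodbye', 3: 'and', 4: 'i', 5: 'hello', 6: '.'}
```

Working with integer IDs instead of strings makes it easy to use a word as a row or column index later on.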

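Let’s set the window size to 1, meaning the context is one word on each side, and count how often each word appears next to every other word. Collecting these counts for every word gives a co-occurrence matrix.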
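The counting step can be sketched as follows. The function name `create_co_matrix` and the hard-coded corpus (the word IDs of the hypothetical sentence "you say goodbye and i say hello .") are assumptions for illustration; plain nested lists are used so the sketch needs no extra packages.

```python
def create_co_matrix(corpus, vocab_size, window_size=1):
    """Count how often each word ID appears within the window of every other ID."""
    co_matrix = [[0] * vocab_size for _ in range(vocab_size)]

    for idx, word_id in enumerate(corpus):
        for offset in range(1, window_size + 1):
            left = idx - offset
            right = idx + offset
            # Skip positions that fall off either end of the corpus.
            if left >= 0:
                co_matrix[word_id][corpus[left]] += 1
            if right < len(corpus):
                co_matrix[word_id][corpus[right]] += 1

    return co_matrix


# Assumed corpus: IDs for "you say goodbye and i say hello ."
corpus = [0, 1, 2, 3, 4, 1, 5, 6]
co_matrix = create_co_matrix(corpus, vocab_size=7, window_size=1)
print(co_matrix[0])  # row for "you": [0, 1, 0, 0, 0, 0, 0]
print(co_matrix[1])  # row for "say": [1, 0, 1, 0, 1, 1, 0]
```

Row i of the matrix is the vector for word i: "you" only ever appears next to "say," while "say" appears next to "you," "goodbye," "i," and "hello."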

The purpose of creating a co-occurrence matrix is to represent the relationships between different words: each row is the vector for one word. Similarity is one way to measure such a relationship, and cosine similarity puts it on a fixed scale.

Cosine similarity is the cosine of the angle between two vectors: their dot product divided by the product of their lengths. If two vectors have the same direction the similarity is 1, if they are perpendicular it is 0, and if they have opposite directions it is -1. Because co-occurrence counts are never negative, the values here fall between 0 and 1.

Applying cosine similarity to the rows of the co-occurrence matrix, we can extract the five words most similar to a given query (the word we are searching for).
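A minimal sketch of the calculation in plain Python. The small `eps` term is an assumption added to avoid division by zero for an all-zero vector, and the two sample vectors are the co-occurrence rows that the hypothetical sentence "you say goodbye and i say hello ." would give for "you" and "i" with a window size of 1.

```python
import math


def cos_similarity(x, y, eps=1e-8):
    """Cosine of the angle between x and y: dot(x, y) / (|x| * |y|)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    # eps keeps the denominator non-zero when a vector is all zeros.
    return dot / ((norm_x + eps) * (norm_y + eps))


# Assumed co-occurrence rows for "you" and "i".
you = [0, 1, 0, 0, 0, 0, 0]
i = [0, 1, 0, 1, 0, 0, 0]
print(round(cos_similarity(you, i), 3))          # 0.707

# Same direction gives (almost exactly) 1, opposite direction gives -1.
print(round(cos_similarity([1, 2], [2, 4]), 3))  # 1.0
print(round(cos_similarity([1, 0], [-1, 0]), 3)) # -1.0
```

Because of `eps`, the results are very slightly smaller in magnitude than the exact values, which is why they are rounded before printing.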
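The search can be sketched like this. The function name `most_similar` and the hard-coded vocabulary and matrix are assumptions for illustration: they are the window-size-1 counts for the hypothetical sentence "you say goodbye and i say hello ."

```python
import math


def cos_similarity(x, y, eps=1e-8):
    """Cosine similarity; eps avoids division by zero for all-zero vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / ((norm_x + eps) * (norm_y + eps))


def most_similar(query, word_to_id, id_to_word, word_matrix, top=5):
    """Return the `top` (word, similarity) pairs closest to `query`."""
    if query not in word_to_id:
        return []
    query_vec = word_matrix[word_to_id[query]]

    # Score every other word against the query vector.
    scores = [
        (id_to_word[i], cos_similarity(query_vec, vec))
        for i, vec in enumerate(word_matrix)
        if id_to_word[i] != query
    ]
    # Highest similarity first; ties keep their original (ID) order.
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top]


# Assumed vocabulary and co-occurrence matrix (window size 1).
words = ["you", "say", "goodbye", "and", "i", "hello", "."]
word_to_id = {word: i for i, word in enumerate(words)}
id_to_word = dict(enumerate(words))
co_matrix = [
    [0, 1, 0, 0, 0, 0, 0],  # you
    [1, 0, 1, 0, 1, 1, 0],  # say
    [0, 1, 0, 1, 0, 0, 0],  # goodbye
    [0, 0, 1, 0, 1, 0, 0],  # and
    [0, 1, 0, 1, 0, 0, 0],  # i
    [0, 1, 0, 0, 0, 0, 1],  # hello
    [0, 0, 0, 0, 0, 1, 0],  # .
]

for word, score in most_similar("you", word_to_id, id_to_word, co_matrix):
    print(f"{word}: {score:.3f}")
```

For the query "you," the words "goodbye," "i," and "hello" tie at about 0.707 because each of them, like "you," appears next to "say." With a corpus this small the ranking is only a toy result; a larger corpus is needed before the similarities become meaningful.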
